Cars mean more than traveling from point A to point B. They are also directly or indirectly linked to one's personal freedom (where to go, when to leave, with whom to travel), and they have become a reflection of our priorities, tastes, aspirations, and who we are (or, more often, who we want to project ourselves to be). This is why cars come in thousands of variations in color, size, and equipment. Although any definition of an "average car" is subjective and not representative, the numbers speak for themselves: the best-selling car model of 2023, counting all of its color, equipment, and subscription (hint) variants, accounts for barely 1.25% of the global market.

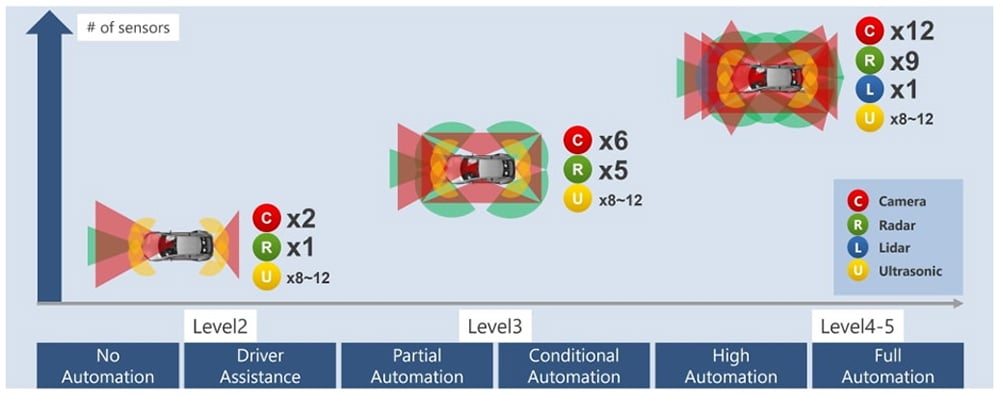

On the other hand, since 1890, 99.99% of cars have shared common basics such as four wheels but only one steering wheel. Within my lifetime, they have all come to include seatbelts, airbags, Anti-lock Braking Systems (ABS), and Electronic Stability Control (ESC), and, proudly, with my own (if insignificant) involvement, all cars will very soon carry an array of sensors along with the Advanced Driver-Assistance Systems (ADAS) features that require them in the first place. Already today, in 2024, two-thirds of new vehicles are sold in markets where features such as Autonomous Emergency Braking (AEB) and Traffic Sign Recognition (TSR) are mandated by law or by industry commitments.

Why Are More Powerful Processing Units Required?

Technically, that translates into a requirement for at least one camera sensor and a corresponding processing unit. Looking a handful of years ahead, more features will expand the list of mandatory equipment, such as a rear-view camera and driver monitoring, both of which are of course already common today.

Thus, alongside the "latest & greatest" ADAS systems that monopolize media attention, the largest volume share in today's automotive market is driven by necessity rather than by tech-savvy customers. This has created a gap between "basic" systems that fulfill the much-needed safety features and premium "state-of-the-art" technology, with the former being very cost-sensitive and continuously shaped by an evolving array of standards, regulations, and NCAP scenarios.

On the technical side, over the last decade such basic systems have been implemented with features distributed across satellite sensors, such as:

- AEB, TSR, Lane Keep Assist (LKA), and similar features in a front camera located behind the windshield

- Driver monitoring systems with a dedicated interior camera and its ECU

- A front radar and/or corner radar

- Dedicated cameras for surround view

- Ultrasonic systems for parking assist

Data from all these sensors is combined through what is referred to as "fusion", which gives a better understanding of the vehicle's surroundings. Many recent innovations concern how this fusion is done. Fusion has traditionally used processed sensor data (i.e., detected objects and their tracking information, such as relative position and speed), while state-of-the-art systems today fuse the raw sensor data to construct a more detailed model of the vehicle's environment. That's why, in automotive, BEV no longer means only Battery Electric Vehicle but also Bird's-Eye View, which is now a trend in how perception data is used in ADAS and Autonomous Driving (AD), because the BEV transformation offers a better understanding of the surrounding infrastructure, vehicles, and obstacles. (From here on in this blog, BEV refers to Bird's-Eye View.)
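To make the traditional approach more concrete, here is a minimal Python sketch of object-level ("late") fusion, assuming each satellite sensor already reports a list of objects with position, relative speed, and a position variance in a shared vehicle frame. The `Detection` format, the greedy nearest-neighbour association, the 2 m gating distance, and the inverse-variance weighting are all hypothetical illustration choices, not how any particular Renesas or OEM stack works. Raw-data or BEV fusion would instead combine pixels and point data before any object is detected, which this sketch does not attempt.

```python
import math
from dataclasses import dataclass


@dataclass
class Detection:
    """One processed object reported by a satellite sensor (hypothetical format)."""
    x: float    # longitudinal position in the vehicle frame [m]
    y: float    # lateral position in the vehicle frame [m]
    vx: float   # relative longitudinal speed [m/s]
    var: float  # position variance reported by the sensor [m^2]


def fuse_objects(camera, radar, gate=2.0):
    """Greedy nearest-neighbour association, then inverse-variance averaging."""
    fused = []
    unused_radar = list(radar)
    for c in camera:
        # Distance from this camera object to every still-unassigned radar object.
        candidates = [
            (math.hypot(c.x - r.x, c.y - r.y), i)
            for i, r in enumerate(unused_radar)
        ]
        candidates = [ci for ci in candidates if ci[0] <= gate]
        if not candidates:
            fused.append(c)  # camera-only object, kept as reported
            continue
        _, idx = min(candidates)
        r = unused_radar.pop(idx)
        # Weight each sensor by the inverse of its reported position variance.
        wc, wr = 1.0 / c.var, 1.0 / r.var
        w = wc + wr
        fused.append(Detection(
            x=(wc * c.x + wr * r.x) / w,
            y=(wc * c.y + wr * r.y) / w,
            vx=r.vx,  # assumption: trust radar for relative speed
            var=1.0 / w,
        ))
    fused.extend(unused_radar)  # radar-only objects, kept as reported
    return fused
```

For example, a camera object at 20 m (variance 1.0) and a radar object at 21 m (variance 0.25) fuse into one object at 20.8 m, pulled toward the more precise radar, while a second radar object at 50 m with no camera match passes through unchanged. Note how little information reaches the fusion step: only object lists, which is exactly what makes this approach cheap but limited.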

Such raw-data fusion can only take place in a centralized architecture, where all sensor processing is done in one processing unit. This contrasts with the satellite architecture commonly used so far, in which each sensor processes its own data and sends only its final object-tracking results for a simpler fusion. In addition to enabling more sophisticated functions, centralized processing and its tightly coupled centralized E/E architecture are essential for a software-defined vehicle and thus inescapable in the very near future.
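A quick back-of-the-envelope calculation shows why moving from object lists to raw sensor data changes the processing requirements so much. The Python sketch below compares the uncompressed output of one camera with a processed object list; the resolution, bit depth, frame rate, object count, and bytes per object are hypothetical figures chosen only for illustration, and the helper names are made up for this example.

```python
def raw_camera_rate_bps(width, height, bits_per_pixel, fps):
    """Uncompressed pixel stream rate of one camera, in bits per second."""
    return width * height * bits_per_pixel * fps


def object_list_rate_bps(num_objects, bytes_per_object, update_hz):
    """Rate of a processed object list sent by a satellite sensor, in bits per second."""
    return num_objects * bytes_per_object * 8 * update_hz


# Hypothetical figures for illustration only:
raw = raw_camera_rate_bps(3840, 2160, 12, 30)   # 8 MP camera, 12-bit RAW, 30 fps
objs = object_list_rate_bps(64, 32, 30)         # 64 objects x 32 bytes, 30 Hz

print(f"raw: {raw / 1e9:.2f} Gbit/s, objects: {objs / 1e3:.1f} kbit/s, "
      f"ratio ~{raw / objs:,.0f}x")
# prints: raw: 2.99 Gbit/s, objects: 491.5 kbit/s, ratio ~6,075x
```

Under these assumptions, a single raw camera stream carries thousands of times more data than its object list, before even counting additional cameras, radars, and ultrasonic sensors. The exact ratio depends entirely on the chosen figures; the point is the order of magnitude that a central processing unit must ingest and process.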

How Would That Impact Vehicle Cost?

Supporting and processing all the sensors' data requires considerably more processing power, and that translates, both directly and indirectly, into higher vehicle cost:

- Directly, more powerful processing units are more expensive, and more resources like memory chips are added to the bill of materials.

- Indirectly, the higher power consumption adds to vehicle cost, whether through cooling devices, thicker copper wires, or even a bigger battery to maintain the same driving range.

Even if this cost increase is partially offset by wiring savings from the much shorter cable length used per vehicle, the bottom line, at least until the end of this decade, is that anyone looking for an affordable car will see its price rise, regardless of whether optional driver-assistance features are added.

How Do Renesas R-Car Gen 4 SoCs Help to Minimize This Cost Increase?

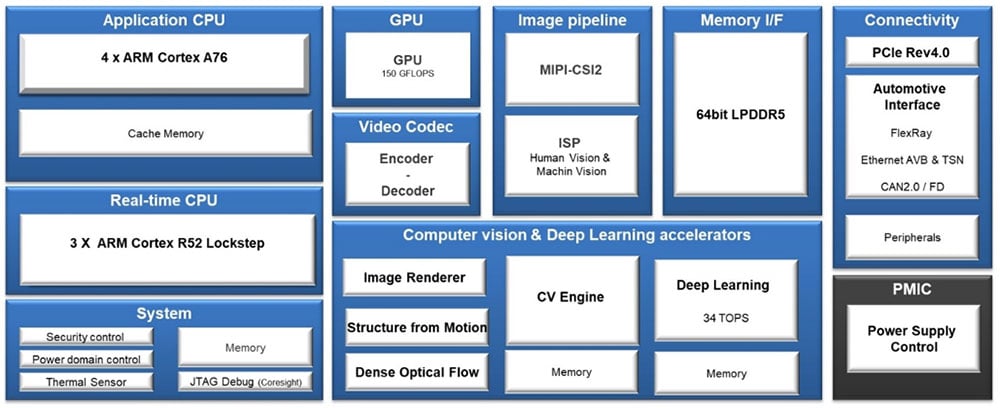

Renesas anticipated this centralization trend and designed its 4th generation of automotive SoCs to be able to:

- Support such a full array of sensors even with the smallest chip.

- Safely and efficiently process the sensor data, ensuring proper isolation and freedom from interference so that independent inputs remain independent, while keeping power consumption easily manageable. See my previous blog, "How to Build Together a Safe and Efficient AD & ADAS Central Computing Solution", for more details.

- Offer a scalable, software- and hardware-compatible lineup of more than a handful of chips in the R-Car Gen 4 series, so that the "best-fit" solution can be selected even late in the project.

OEMs can build a cost-optimized, highly efficient L2/L2+ solution, saving tens of US dollars per vehicle compared with other solutions on the market, thanks to the following merits of Renesas R-Car Gen 4x SoCs:

- Designed for automotive applications from Day 1, including lifetime targets and operating range.

- True ASIL-D SoC with state-of-the-art freedom-from-interference mechanisms and no need for an external MCU.

- Support for the latest automotive camera sensors on the market.

- Optimized power consumption, for example <10 W for 2 cameras + 5 radars + 12 ultrasonic sensors (USS), or 12 W for 6 cameras + 5 radars + 12 USS.

- Renesas' AI Hybrid Compiler tool

- Renesas' open "white-box" solution, along with off-the-shelf solutions already available from many R-Car Consortium partners

- Optimized BOM with Renesas' power management IC (PMIC)

With the R-Car SoCs, we continue to expand our portfolio to support cost-competitive ADAS ECU development. This product scalability is handy when aiming to offer vehicles that meet global mandates (#ENCAP26 #IIHS25 #UN-R152) with uncompromised driving range and that are easy to upgrade with on-order or subscription-based features and options.

Learn more about the first series of our R-Car V4x family, and watch out for upcoming extensions to the V4x family.