Making the Endpoint Intelligent

The Internet of Things (IoT) has made the world smarter and more responsive by merging the digital and physical worlds. Over the past few years, the IoT has exhibited exponential growth across a wide range of applications. According to a McKinsey study, the IoT will have an economic impact of $4 trillion to $11 trillion by 2025. The edge continues to become more intelligent, and vendors are racing to support more connected and smart endpoint devices.

Backed by secure cloud infrastructure, smart connected devices offer many advantages, including lower cost of ownership, resource efficiency, flexibility, and convenience. However, transferring data back and forth between a device and the cloud adds latency and exposes the data to privacy risks in transit. This is generally not an issue for non-real-time, latency-tolerant applications, but for businesses that rely on real-time analytics and need to respond quickly to events as they happen, it can become a major performance bottleneck.

Imagine an industrial plant where real-time data analytics and intelligent machine-to-sensor communication can significantly optimize overall operations, logistics, and the supply chain. Data generated by such industrial sensors and control devices would be particularly beneficial for factory operators, as it could enable them to detect anomalies early, prevent costly production errors, and above all make the workplace safe.

This presents a real need to perform localized machine learning processing and analytics that would help reduce latency for critical applications, prevent data breaches, and effectively manage the data being generated by IoT devices. The only way to accomplish this is to bring the computation of data closer to where it is collected, namely the endpoint, rather than sending that data all the way back to a centralized cloud or datacentre for processing.

Combining high-performance IoT devices with ML capabilities has unlocked new use cases and applications, giving rise to the Artificial Intelligence of Things (AIoT). The possibilities of AIoT, or AI at the edge, are endless. For instance, picture hearing aids that use algorithms to filter background noise from conversations, or smart-home devices that rely on facial and vocal recognition to switch to a user’s personalized settings. These personalized insights, decisions, and predictions are possible because of a concept called Endpoint Intelligence or Endpoint AI.

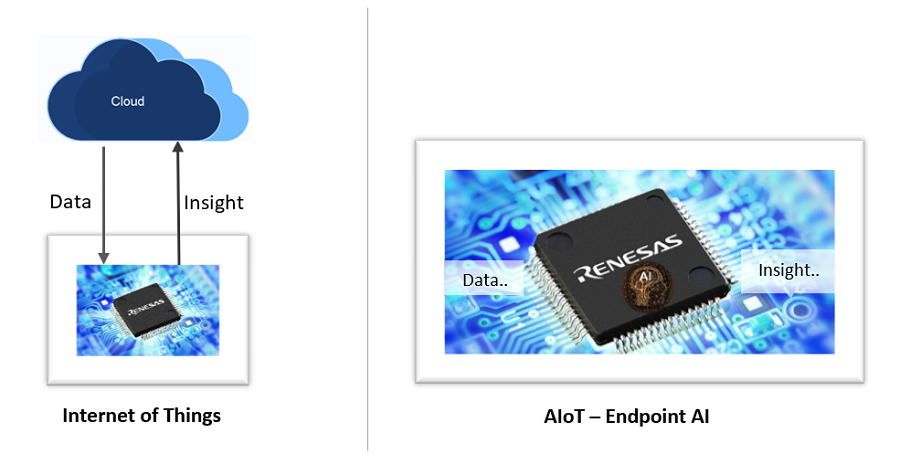

Endpoint AI is a new frontier in artificial intelligence that brings the processing power of AI to the edge. It is a revolutionary way of managing information, accumulating relevant data, and making decisions locally on a device. Endpoint AI employs intelligent functionality at the edge of the network. In other words, it transforms the IoT devices that are used to compute data into smarter tools with AI features, equipping them with real-time decision-making capabilities. The goal is to bring machine-learning-based intelligent decision-making physically closer to the source of the data. As illustrated below, pre-trained AI/ML models can now be deployed effectively at the endpoint, enabling higher system efficiency than traditional cloud-connected IoT systems.

Traditional IoT vs Artificial Intelligence of Things

Endpoint AI uses machine learning algorithms that run on local edge devices to make decisions without having to send information to cloud servers, or at least to reduce how much information is sent.

With the vast amount of real-time data collected from IoT devices, intelligent machine learning algorithms are the most efficient way to get valuable insights from the data. However, these machine learning algorithms can be complex as they require higher compute power and a larger memory. Furthermore, the time frame required to identify patterns and make accurate decisions in huge datasets can be quite lengthy.

In the past, the ability to adopt efficient machine learning algorithms on constrained devices like a microcontroller was simply unimaginable, but this is now possible with advancements in the TinyML space. TinyML has been a game-changer for many embedded applications as it allows users to run ML algorithms directly on microcontrollers. This enables more efficient energy management, data protection, faster response times, and footprint-optimized AI/ML endpoint-capable algorithms.
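One common way to shrink a model's footprint for a microcontroller is to store weights as 8-bit integers instead of 32-bit floats. The article does not name a specific technique, so the following is only a minimal sketch of symmetric int8 quantization in plain Python; the function names and the sample weights are hypothetical and chosen purely for illustration.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of float weights to the int8 range."""
    max_abs = max(abs(w) for w in weights)
    # One shared scale maps the largest magnitude to 127 (assumption: per-tensor scale).
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from their int8 representation."""
    return [q * scale for q in quantized]


# Hypothetical weights, for illustration only.
weights = [0.42, -1.0, 0.25, 0.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

float32_bytes = 4 * len(weights)  # storage as 32-bit floats
int8_bytes = 1 * len(weights)     # storage as 8-bit integers
max_error = max(abs(r - w) for r, w in zip(restored, weights))
```

Under these assumptions the weights take a quarter of the storage, and each restored weight differs from the original by at most about half the scale. Production TinyML toolchains typically add calibration and per-channel scales on top of this basic idea.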

Additionally, the new generation of multi-purpose microcontrollers now offers sufficient compute power, intelligent power-saving peripherals, and most importantly, robust security engines that provide the mandatory privacy of data within the device. This allows for new applications in the AIoT space as well as new types of data processing, latency, and security solutions that can operate offline as well as online.

Let's look briefly into the advantages of Endpoint AI.

Privacy and Security - A Prerequisite

At the heart of effective endpoint AI is data collection and analysis, often in environments where privacy and security are paramount concerns because of regulations or business needs.

Endpoint AI is fundamentally more secure than cloud-only processing. Data is not simply sent to the cloud; it is processed right on the endpoint itself. According to an F-Secure report, IoT endpoints were the “top target of internet attacks in 2019”, and another study suggests that IoT devices experience an average of 5,200 attacks per month. These attacks mostly arise due to the transfer and flow of data from IoT devices into the cloud. Being able to analyze data without moving it outside its original environment provides an added layer of protection against hackers.

Efficient Data Transfer

Centralized processing requires that data be moved from its source to a central location where it can be analyzed. The time spent transferring the data can be significant and creates a risk of inaccurate results, especially if the underlying circumstances have changed considerably between collection and analysis.

With Endpoint AI, devices, sensors, and machines process data locally and transmit only the relevant results to an edge data center or cloud, significantly reducing the time to decisive action and increasing the efficiency of the transfer, processing, and results.

Some processing can be done on distributed sources (edge devices) effectively reducing network traffic, improving accuracy, and reducing costs.
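To make the traffic reduction concrete, here is a minimal sketch in which a device summarizes a window of sensor readings locally and uploads only the summary plus any out-of-range values. The function name, the threshold, the window size, and the synthetic readings are all hypothetical assumptions, not taken from any particular product.

```python
import json
from statistics import mean


def summarize_window(readings, threshold):
    """Reduce a window of raw readings to a compact on-device summary."""
    return {
        "count": len(readings),
        "mean": round(mean(readings), 2),
        "max": max(readings),
        # Only readings above the (assumed) threshold are forwarded individually.
        "anomalies": [r for r in readings if r > threshold],
    }


# Synthetic window of 200 temperature-like readings with one injected spike.
readings = [round(20.0 + (i % 5) * 0.1, 1) for i in range(200)]
readings[57] = 35.7

summary = summarize_window(readings, threshold=30.0)

raw_payload = json.dumps(readings)     # what a cloud-only design would upload
summary_payload = json.dumps(summary)  # what the endpoint uploads instead
bytes_saved = len(raw_payload) - len(summary_payload)
```

In this sketch the summary payload is far smaller than the raw window while still reporting the spike. How much is saved in practice depends on the window size and on how much detail the cloud side actually needs.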

Minimal Wait Time

A latency of 1,500 milliseconds (1.5 seconds) is the limit for an e-commerce site to achieve a user experience similar to that of a brick-and-mortar store. Users will not tolerate longer delays and will leave, resulting in lower revenues. With Endpoint AI, latency is reduced by processing data closer to where it is collected. This enables software and hardware solutions to be deployed seamlessly, with zero downtime.
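The latency argument can be expressed as a simple budget: a cloud round trip pays for the uplink, the remote inference, and the downlink, while an endpoint pays only for local inference. The sketch below works through that arithmetic. Every number in it, including the 100 ms deadline, is a hypothetical placeholder rather than a measurement, and the function names are illustrative.

```python
def cloud_latency_ms(uplink_ms, cloud_inference_ms, downlink_ms):
    """End-to-end latency when the decision is made in the cloud."""
    return uplink_ms + cloud_inference_ms + downlink_ms


def endpoint_latency_ms(local_inference_ms):
    """End-to-end latency when the decision is made on the device."""
    return local_inference_ms


def meets_deadline(latency_ms, deadline_ms):
    """True if the response arrives within the application's deadline."""
    return latency_ms <= deadline_ms


# Placeholder values for illustration only, not benchmark results.
cloud = cloud_latency_ms(uplink_ms=60, cloud_inference_ms=15, downlink_ms=60)
local = endpoint_latency_ms(local_inference_ms=40)
deadline_ms = 100  # assumed real-time deadline

cloud_ok = meets_deadline(cloud, deadline_ms)
local_ok = meets_deadline(local, deadline_ms)
```

With these assumed values, the slower on-device inference still meets the deadline because it avoids the network round trip entirely, whereas the cloud path does not.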

Reliability When it Matters

Another key advantage of endpoint AI is reliability: because it is fundamentally less dependent on the cloud, it improves overall system performance and reduces the risk of data loss.

Endpoint AI ensures that your information is always available and never leaves the edge, allowing independent, real-time decision-making. Decisions must be accurate and made in real time, and the only way to achieve this is to implement AI at the edge.

All in One Device

Endpoint AI offers the ability to integrate multimodal AI/ML architectures that can help enhance system performance, functionality, and above all safety. For example, a combination of voice and vision functionality is particularly well suited for hands-free AI-based vision systems. Voice recognition activates object and facial recognition for critical vision-based tasks in applications like smart surveillance or hands-free video conferencing systems. Vision AI recognition may also be used to monitor operator behavior, control critical operations, or manage error or risk detection across a number of commercial or industrial applications.

A Sustainable and Viable Approach

Integrating AI and ML capabilities with high-performance on-device compute has opened up a new world of possibilities for developing highly sustainable solutions. This integration has resulted in portable, smarter, energy-efficient, and more economical devices. AI can be harnessed to help manage environmental impacts across a variety of applications, for example AI-infused clean distributed energy grids, precision agriculture, sustainable supply chains, environmental monitoring, and enhanced weather and disaster prediction and response.

Renesas is actively involved in providing ready-made AI/ML solutions and approaches as references within various applications and systems. Together with its partners, Renesas offers a comprehensive and highly optimized endpoint-capable AI/ML solution on both the hardware and the software side, thereby fulfilling all the attributes that must be considered from the very beginning.

The impact of AI isn’t just in the cloud; it will be everywhere and in everything. Localized on-device intelligence, reduced latency, data integrity, faster action, scalability, and more are what Endpoint AI is all about, making the opportunities in this new AI frontier endless.

Now is the time for developers, product managers, and business stakeholders to take advantage of this huge opportunity by building better AIoT systems that solve real-world problems and generate new revenue streams.

Find information on products and technologies to help you implement AI solutions at renesas.com/AI.