PCIe2 to S-RIO2 Evaluation Board

The IDT Tsi721-16GEBI Evaluation Board is a prototyping platform that leverages IDT’s Tsi721 PCIe® to RapidIO® Gen2 bridge as well as the CPS-1432 RapidIO Gen2...

The Tsi721 converts between PCIe and RapidIO in both directions and provides full line rate bridging at 20 Gbaud. Using the Tsi721, designers can develop heterogeneous systems that leverage the peer-to-peer networking performance of RapidIO while also using multiprocessor clusters that may only be PCIe-enabled. Applications that need to transfer large amounts of data efficiently without processor involvement can use the Tsi721's full line rate block DMA and messaging engines.

Learn more: IDT RapidIO Development Systems

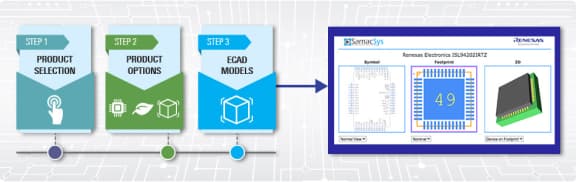

Schematic symbols, PCB footprints, and 3D CAD models from SamacSys can be found by clicking on products in the Product Options table. If a symbol or model isn't available, it can be requested directly from the website.
