In recent years, many new features have been added to vacuum cleaners of all types and models. One important feature is floor type detection, which helps the cleaner maintain consistent operation across different floor surfaces and offers several benefits, including:

- Reduced power consumption

- Easy operation for users

- Motor noise reduction

Power consumption is a key consideration for all battery-operated models but is especially critical in robot cleaners.

Approach to floor type detection

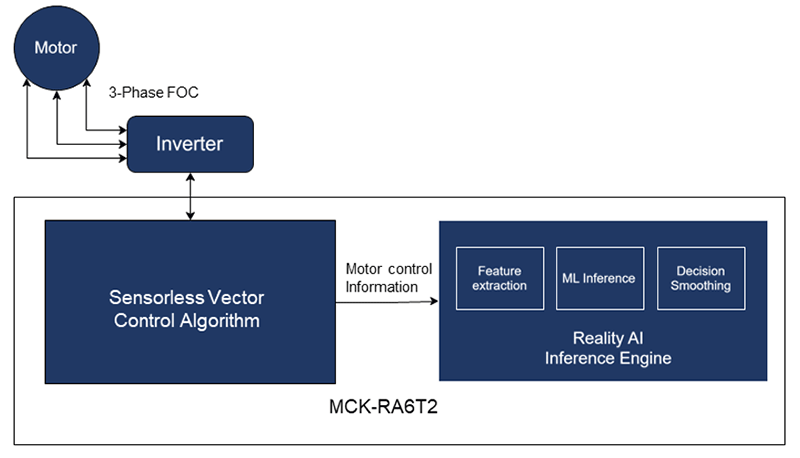

Brushless DC (BLDC) motors are increasingly used to drive the brush in the cleaner head, thanks to improvements in cost, maintenance, and noise. The cost of implementing the inverter and MCU needed to drive a BLDC motor also continues to fall. Here, we introduce a typical use case in which a BLDC motor enables sensorless floor type detection.

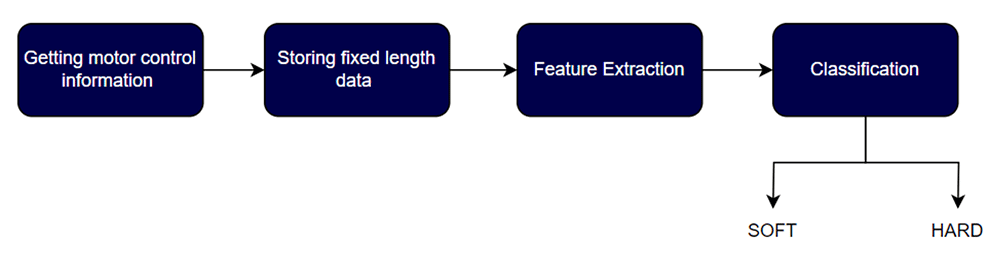

- Acquiring motor control information: feedback from the shunt resistor is shared with the motor control logic

- Storing fixed-length data: samples are buffered into a fixed-length decision window

- Feature extraction: the system extracts specific features from the motor control information

- Classification: a classifier uses the extracted features to determine the floor type
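The four steps above can be sketched in C as follows. This is a minimal illustration under stated assumptions, not the Renesas implementation: the window length `WINDOW_LEN`, the two features (mean and peak-to-peak current), and the single-threshold `classify()` rule are hypothetical stand-ins for the features and model that Reality AI Tools would generate. It also assumes, for illustration only, that a soft floor loads the brush motor more and therefore raises the measured current.

```c
#include <stddef.h>

/* Hypothetical decision window length (samples per classification). */
#define WINDOW_LEN 256

/* Hypothetical floor classes for the two-class PoC. */
typedef enum { FLOOR_HARD = 0, FLOOR_SOFT = 1 } floor_t;

/* Step 2: fixed-length buffer of motor current samples (from step 1). */
typedef struct {
    float samples[WINDOW_LEN];
    size_t count;
} window_t;

static void window_reset(window_t *w) { w->count = 0; }

/* Appends one sample; returns 1 once the window is full, otherwise 0. */
static int window_push(window_t *w, float sample)
{
    if (w->count < WINDOW_LEN) {
        w->samples[w->count++] = sample;
    }
    return w->count == WINDOW_LEN;
}

/* Step 3: two simple features computed over the window. */
typedef struct {
    float mean;         /* average current, assumed to track brush load */
    float peak_to_peak; /* max minus min, a crude ripple measure */
} features_t;

static features_t extract_features(const window_t *w)
{
    features_t f = {0.0f, 0.0f};
    if (w->count == 0) {
        return f; /* nothing buffered yet */
    }
    float lo = w->samples[0], hi = w->samples[0], sum = 0.0f;
    for (size_t i = 0; i < w->count; i++) {
        float s = w->samples[i];
        sum += s;
        if (s < lo) lo = s;
        if (s > hi) hi = s;
    }
    f.mean = sum / (float)w->count;
    f.peak_to_peak = hi - lo;
    return f;
}

/* Step 4: stand-in classifier, a single threshold on mean current.
 * Assumes a soft floor raises the load current; a real model would
 * be generated by Reality AI Tools instead. */
static floor_t classify(features_t f, float mean_threshold)
{
    return (f.mean > mean_threshold) ? FLOOR_SOFT : FLOOR_HARD;
}
```

In an application, `window_push()` would be called from the motor control loop with each current sample, followed by `extract_features()`, `classify()`, and `window_reset()` once it reports a full window.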

Because no additional sensor is required, this implementation can significantly reduce BOM cost.

Application example from Renesas

The Renesas floor type detection solution is engineered for speed and responsiveness while maintaining high accuracy. It can run on hardware across the RA and RX MCU families with minimal BOM cost; this example uses an RA6T2 MCU.

In the Proof of Concept (PoC) unit, our model classifies two floor types: soft and hard. By adding training data, you can easily increase the number of floor types to be classified.
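Adding floor types mostly changes the model's output dimension rather than the inference code. As a hypothetical illustration (Reality AI's actual model structure is not described here), a linear classifier with one weight row per class picks the class with the highest score, so adding a floor type would mean raising `NUM_CLASSES` and retraining the weights. All names below are illustrative.

```c
#include <stddef.h>

/* Hypothetical feature count and class count: hard, soft, plus one added type. */
#define NUM_FEATURES 2
#define NUM_CLASSES 3

/* Hypothetical trained parameters: one weight row and one bias per class. */
typedef struct {
    float weights[NUM_CLASSES][NUM_FEATURES];
    float bias[NUM_CLASSES];
} linear_model_t;

/* Returns the index of the class with the highest linear score. */
static size_t predict_class(const linear_model_t *m, const float x[NUM_FEATURES])
{
    size_t best = 0;
    float best_score = 0.0f;
    for (size_t c = 0; c < NUM_CLASSES; c++) {
        float score = m->bias[c];
        for (size_t j = 0; j < NUM_FEATURES; j++) {
            score += m->weights[c][j] * x[j];
        }
        if (c == 0 || score > best_score) {
            best = c;
            best_score = score;
        }
    }
    return best;
}
```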

Model size in this use case:

- Parameters: 2678 bytes

- Stack usage: 2560 bytes

- Pre-allocated: 12 bytes

- Code: 2008 bytes

Inference time is roughly 1 ms to 2 ms on the RA6T2 MCU.
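The reported figures can be totalled to estimate the model's overall memory footprint. The split between flash and RAM below is an assumption (parameters and code in flash, stack and pre-allocated buffers in RAM), and the budget values passed to the hypothetical `model_fits()` helper are placeholders for whatever an application reserves.

```c
#include <stdint.h>

/* Figures reported for the floor type detection model, in bytes. */
#define MODEL_PARAMS_BYTES   2678u
#define MODEL_STACK_BYTES    2560u
#define MODEL_PREALLOC_BYTES   12u
#define MODEL_CODE_BYTES     2008u

/* Assumption: parameters and code live in flash, while the stack and
 * pre-allocated buffers occupy RAM at run time. */
enum {
    MODEL_FLASH_BYTES = MODEL_PARAMS_BYTES + MODEL_CODE_BYTES,    /* 4686 */
    MODEL_RAM_BYTES   = MODEL_STACK_BYTES + MODEL_PREALLOC_BYTES, /* 2572 */
    MODEL_TOTAL_BYTES = MODEL_FLASH_BYTES + MODEL_RAM_BYTES       /* 7258 */
};

/* Hypothetical helper: 1 if the model fits the given budgets, else 0. */
static int model_fits(uint32_t flash_budget, uint32_t ram_budget)
{
    return (uint32_t)MODEL_FLASH_BYTES <= flash_budget &&
           (uint32_t)MODEL_RAM_BYTES <= ram_budget;
}
```

Under this assumed split, the model needs 4686 bytes of flash and 2572 bytes of RAM, 7258 bytes in total.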

How did we create the application example?

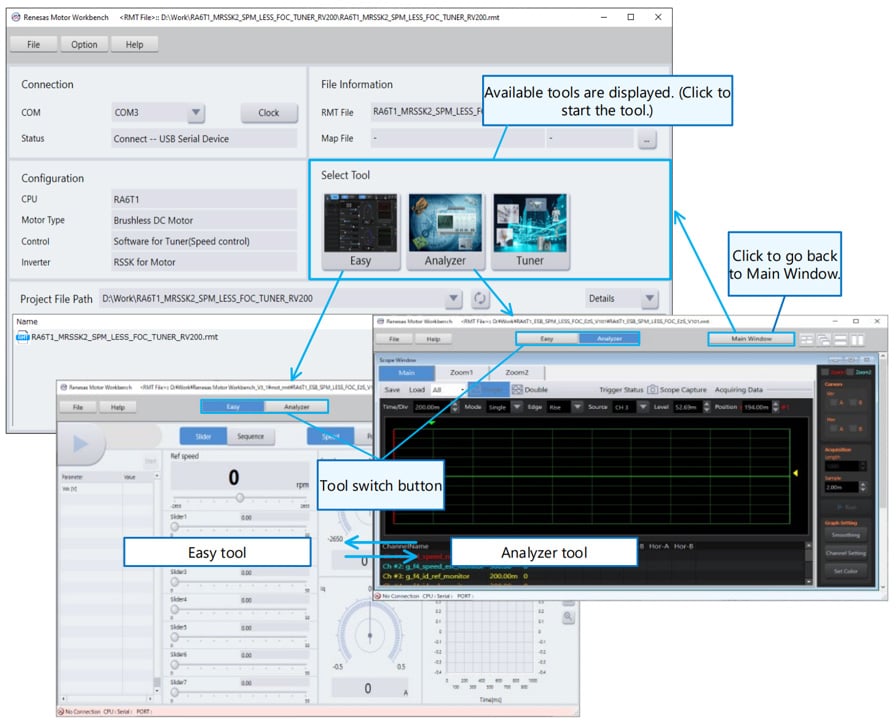

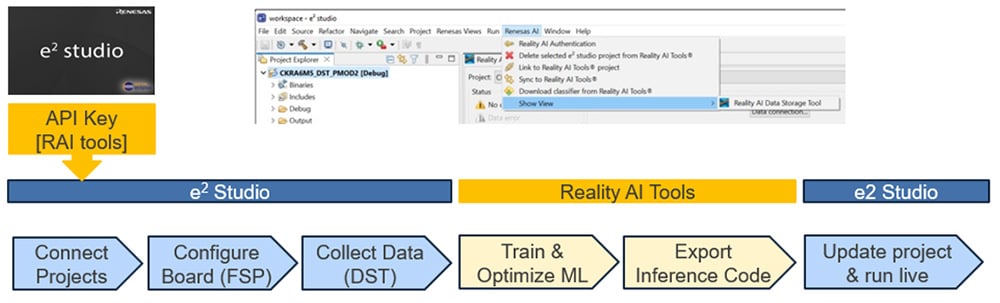

Renesas offers a range of motor control solutions with software examples. Using the Renesas e² studio IDE together with the Motor Workbench development support tool, users can tune motor parameters, collect data, integrate the other features a vacuum cleaner requires, and finally integrate AI models generated with the Reality AI Tools® module.

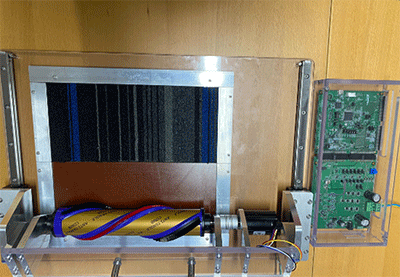

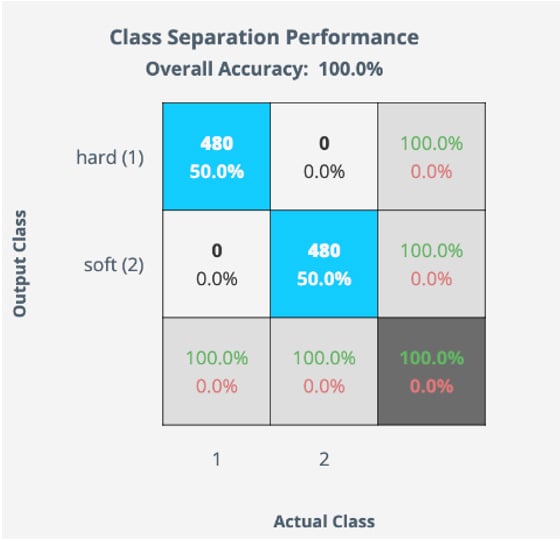

We collected real motor control data by sharing existing variables from the motor control sample code, recording data on each target floor type. This data was fed into Reality AI's feature extraction and training engine, which generated a model. The model achieved 100% K-fold accuracy on the training data, so we selected it for live testing and benchmarking. In parallel, the Reality AI BOM optimization feature proposes the best combination of the tens of available motor control variables, minimizing resource requirements.
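K-fold accuracy means every recording is scored by a model that was not trained on it: the data is split into K folds, and each fold is held out in turn while the rest is used for training. The sketch below shows that procedure with a deliberately simple stand-in classifier (nearest class centroid on a single feature). The `sample_t` layout, the 0/1 labels, and the round-robin fold assignment are hypothetical; Reality AI's own training and validation internals are not shown.

```c
#include <stddef.h>

/* Hypothetical labeled sample: one feature value per recording window. */
typedef struct {
    float feature; /* e.g. mean motor current of one window */
    int label;     /* must be 0 (hard floor) or 1 (soft floor) */
} sample_t;

/* Round-robin fold assignment: sample i belongs to fold i % k. */
static size_t fold_of(size_t i, size_t k) { return i % k; }

/* Fraction of samples classified correctly when each fold is held out. */
static float kfold_accuracy(const sample_t *data, size_t n, size_t k)
{
    size_t correct = 0, tested = 0;
    for (size_t fold = 0; fold < k; fold++) {
        float sum[2] = {0.0f, 0.0f};
        size_t cnt[2] = {0, 0};
        /* "Train": class centroids from every sample outside this fold. */
        for (size_t i = 0; i < n; i++) {
            if (fold_of(i, k) == fold) continue;
            sum[data[i].label] += data[i].feature;
            cnt[data[i].label]++;
        }
        if (cnt[0] == 0 || cnt[1] == 0) continue; /* fold cannot be trained */
        float c0 = sum[0] / (float)cnt[0];
        float c1 = sum[1] / (float)cnt[1];
        /* Evaluate on the held-out fold only. */
        for (size_t i = 0; i < n; i++) {
            if (fold_of(i, k) != fold) continue;
            float d0 = data[i].feature - c0, d1 = data[i].feature - c1;
            int pred = (d0 * d0 <= d1 * d1) ? 0 : 1;
            correct += (pred == data[i].label);
            tested++;
        }
    }
    return tested ? (float)correct / (float)tested : 0.0f;
}
```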

To reduce development effort, the model can be verified in Reality AI against a separate recorded data set that was not used for training, before it is deployed on real hardware. Once it achieved the expected accuracy, the model was integrated back into the e² studio project and then tested extensively in live settings.
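Results on a held-out recording set are often summarized with a confusion matrix, which shows not only overall accuracy but also which floor types are confused with each other. A minimal sketch, with hypothetical names and the two PoC classes encoded as hard = 0 and soft = 1:

```c
#include <stddef.h>

/* Number of floor types in the PoC: hard (0) and soft (1). */
#define NUM_FLOOR_TYPES 2

/* counts[actual][predicted] over a held-out test set. */
typedef struct {
    unsigned counts[NUM_FLOOR_TYPES][NUM_FLOOR_TYPES];
} confusion_t;

/* Records one test window; out-of-range labels are ignored. */
static void confusion_add(confusion_t *cm, int actual, int predicted)
{
    if (actual < 0 || actual >= NUM_FLOOR_TYPES ||
        predicted < 0 || predicted >= NUM_FLOOR_TYPES) {
        return;
    }
    cm->counts[actual][predicted]++;
}

/* Overall accuracy: correct predictions (diagonal) divided by total. */
static float confusion_accuracy(const confusion_t *cm)
{
    unsigned diag = 0, total = 0;
    for (int a = 0; a < NUM_FLOOR_TYPES; a++) {
        for (int p = 0; p < NUM_FLOOR_TYPES; p++) {
            total += cm->counts[a][p];
            if (a == p) diag += cm->counts[a][p];
        }
    }
    return total ? (float)diag / (float)total : 0.0f;
}

/* Recall for one floor type: share of its windows that were recognized. */
static float confusion_recall(const confusion_t *cm, int cls)
{
    unsigned row = 0;
    for (int p = 0; p < NUM_FLOOR_TYPES; p++) row += cm->counts[cls][p];
    return row ? (float)cm->counts[cls][cls] / (float)row : 0.0f;
}
```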

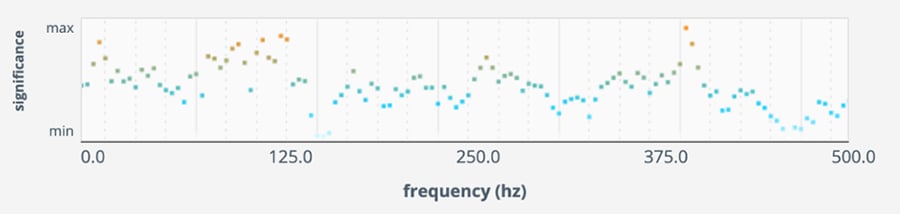

When productizing a machine learning model, it is sometimes necessary to understand how the model works and how it reaches its decisions, rather than treating it as a black box. Reality AI addresses this as well: its decision significance graph shows which features are more important and which are less important.

For future enhancements, Renesas also provides a way to retrain the model. A model created with Reality AI Tools can be improved simply by updating its parameters. These parameters can be placed in a separate area of flash memory, such as the data flash area, and updated easily via over-the-air (OTA) updates.
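Keeping the parameters in a separate flash region means the firmware should check that an updated parameter set is complete and intact before using it. The layout below is a hypothetical example, not the Reality AI parameter format: a small header with a magic value, version, length, and CRC-32 precedes the payload, and the illustrative `params_validate()` returns `NULL` so the caller can fall back to parameters built into code flash when the check fails.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical magic value marking a parameter blob ("FLTR"). */
#define PARAM_MAGIC 0x464C5452u

/* Hypothetical header stored in front of the parameter payload. */
typedef struct {
    uint32_t magic;   /* identifies a parameter blob */
    uint32_t version; /* incremented by each OTA update */
    uint32_t length;  /* payload length in bytes */
    uint32_t crc32;   /* CRC-32 of the payload */
} param_header_t;

/* Standard reflected CRC-32 (polynomial 0xEDB88320), bitwise for brevity. */
static uint32_t crc32_calc(const uint8_t *data, size_t len)
{
    uint32_t crc = 0xFFFFFFFFu;
    for (size_t i = 0; i < len; i++) {
        crc ^= data[i];
        for (int b = 0; b < 8; b++) {
            crc = (crc >> 1) ^ (0xEDB88320u & (0u - (crc & 1u)));
        }
    }
    return ~crc;
}

/* Returns a pointer to the validated payload, or NULL if the region does
 * not hold a complete, uncorrupted blob of the expected size. */
static const uint8_t *params_validate(const uint8_t *region, size_t region_len,
                                      size_t expected_len)
{
    param_header_t hdr;
    if (region_len < sizeof hdr) return NULL;
    memcpy(&hdr, region, sizeof hdr); /* copy avoids unaligned reads */
    if (hdr.magic != PARAM_MAGIC) return NULL;
    if (hdr.length != expected_len || hdr.length > region_len - sizeof hdr) {
        return NULL;
    }
    const uint8_t *payload = region + sizeof hdr;
    if (crc32_calc(payload, hdr.length) != hdr.crc32) return NULL;
    return payload;
}
```

Checking the length against the size the model code expects guards against loading a parameter set built for a different model layout.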

Conclusion

The floor type detection example demonstrates how Renesas Reality AI Tools can address real-world challenges, improving the user experience and enhancing vacuum systems with additional features. Our AI models have a small footprint and can be extended by collecting additional data.

For more information, including videos and documentation, or to request a demo, please visit renesas.com/reality-ai-tools.