Overview

Description

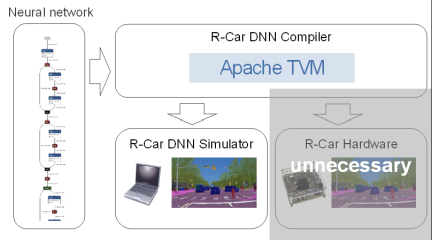

High-speed simulator for deep learning model programs for R-Car

Deep learning inference is both computationally intensive and memory intensive, so operations must be made more efficient to run in real time on an in-vehicle SoC such as R-Car. One way to improve efficiency is to quantize the weights and other parameters of the deep learning model, converting them to integers so that inference runs as integer operations. Because the hardware performs integer arithmetic, its recognition results differ slightly from those of your original neural network model. The R-Car DNN Simulator lets you confirm these differences even if you don't have the hardware available.

Unlike a conventional instruction set simulator (ISS), the R-Car DNN Simulator does not reproduce each hardware instruction; it reproduces only the output data. This makes it about 10 times faster than an ISS, so it can be used to evaluate accuracy over a large volume of images. Because the output of each layer can be checked, it can also be used for debugging to improve accuracy.
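To make the source of these differences concrete, here is a minimal sketch of generic symmetric per-tensor int8 quantization applied to a tiny two-layer dense network, comparing the float reference against the integer path layer by layer. It is only an illustration of the general technique under stated assumptions. It is not the quantization scheme, model format, or API of the R-Car DNN Simulator, and all function names (`quantize`, `dense_float`, `dense_int`, `max_abs_diff`) are hypothetical.

```python
# Hypothetical sketch: generic symmetric per-tensor int8 quantization.
# This is NOT the R-Car DNN Simulator's scheme or API; it only shows why
# integer arithmetic produces slightly different outputs than float.
import random


def quantize(values, num_bits=8):
    """Map floats to signed integers that share one scale (symmetric, per tensor)."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for int8
    max_abs = max(abs(v) for v in values) or 1.0  # avoid a zero scale
    scale = max_abs / qmax
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def dense_float(x, w, n_out):
    """Float reference: y[j] = ReLU(sum_i x[i] * w[j * n_in + i])."""
    n_in = len(x)
    return [max(0.0, sum(x[i] * w[j * n_in + i] for i in range(n_in)))
            for j in range(n_out)]


def dense_int(x, w, n_out):
    """Integer path: quantize inputs and weights, accumulate in ints, rescale."""
    qx, sx = quantize(x)
    qw, sw = quantize(w)
    n_in = len(x)
    out = []
    for j in range(n_out):
        acc = sum(qx[i] * qw[j * n_in + i] for i in range(n_in))  # integer MACs only
        out.append(max(0.0, acc * sx * sw))  # dequantize, then ReLU
    return out


def max_abs_diff(a, b):
    """Largest element-wise gap between two layer outputs."""
    return max(abs(p - q) for p, q in zip(a, b))


random.seed(0)
n_in, n_hidden, n_out = 16, 8, 4
x = [random.uniform(-1, 1) for _ in range(n_in)]
w1 = [random.uniform(-0.5, 0.5) for _ in range(n_hidden * n_in)]
w2 = [random.uniform(-0.5, 0.5) for _ in range(n_out * n_hidden)]

# Float reference model.
h_f = dense_float(x, w1, n_hidden)
y_f = dense_float(h_f, w2, n_out)
# Integer model: layer 2 consumes layer 1's integer-path output, as hardware would.
h_q = dense_int(x, w1, n_hidden)
y_q = dense_int(h_q, w2, n_out)

# Per-layer comparison, analogous to checking each layer's output when debugging.
for name, ref, sim in [("layer1", h_f, h_q), ("layer2", y_f, y_q)]:
    print(f"{name}: max |float - int| = {max_abs_diff(ref, sim):.5f}")
```

Feeding the integer output of one layer into the next mirrors how errors accumulate on real hardware, which is why inspecting each layer's output, rather than only the final result, helps locate where accuracy is lost.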

News & Blog Posts

| Title | Type | Date |
|---|---|---|
| Renesas Strategy for Automotive Software | Blog Post | Jan 31, 2023 |
| Introduction of R-Car DNN Simulator | Blog Post | Dec 20, 2022 |
| Renesas and Fixstars to Jointly Develop Tools Suite that Optimizes AD and ADAS AI Software for R-Car SoCs | News | Dec 15, 2022 |