AI-based applications are rapidly expanding in the market and have increased the number of deployments in various embedded fields. On the other hand, the requirement of AI performance also increases image-based AI inference (hereafter, “Vision AI”) due to the rapidly evolving neural network itself. With this trend, the issue of heat generation by power consumption becomes apparent.

In this article, I will introduce the solution to the biggest challenge in AI inference, heat generation, which you have already faced or will face.

As you know better than I do, when the heat generation is increased, you need to make countermeasures to install a heat sink and fan, or a bigger casing to stop increasing the temperature in the case. But these countermeasures are one of the reasons your product becomes expensive and bigger.

Also, when your system operates for a long time, thermal throttling* and other heat-oriented problems may occur. As a result, the performance of AI inference becomes unstable.

* This is a function of when the chip temperature becomes high, it reduces the clock frequency of the CPU or AI accelerator.

Based on the above, we defined all necessary conditions for high-loaded endpoint Vision AI which are "practical AI performance", "low power consumption" and "stable AI inference performance".

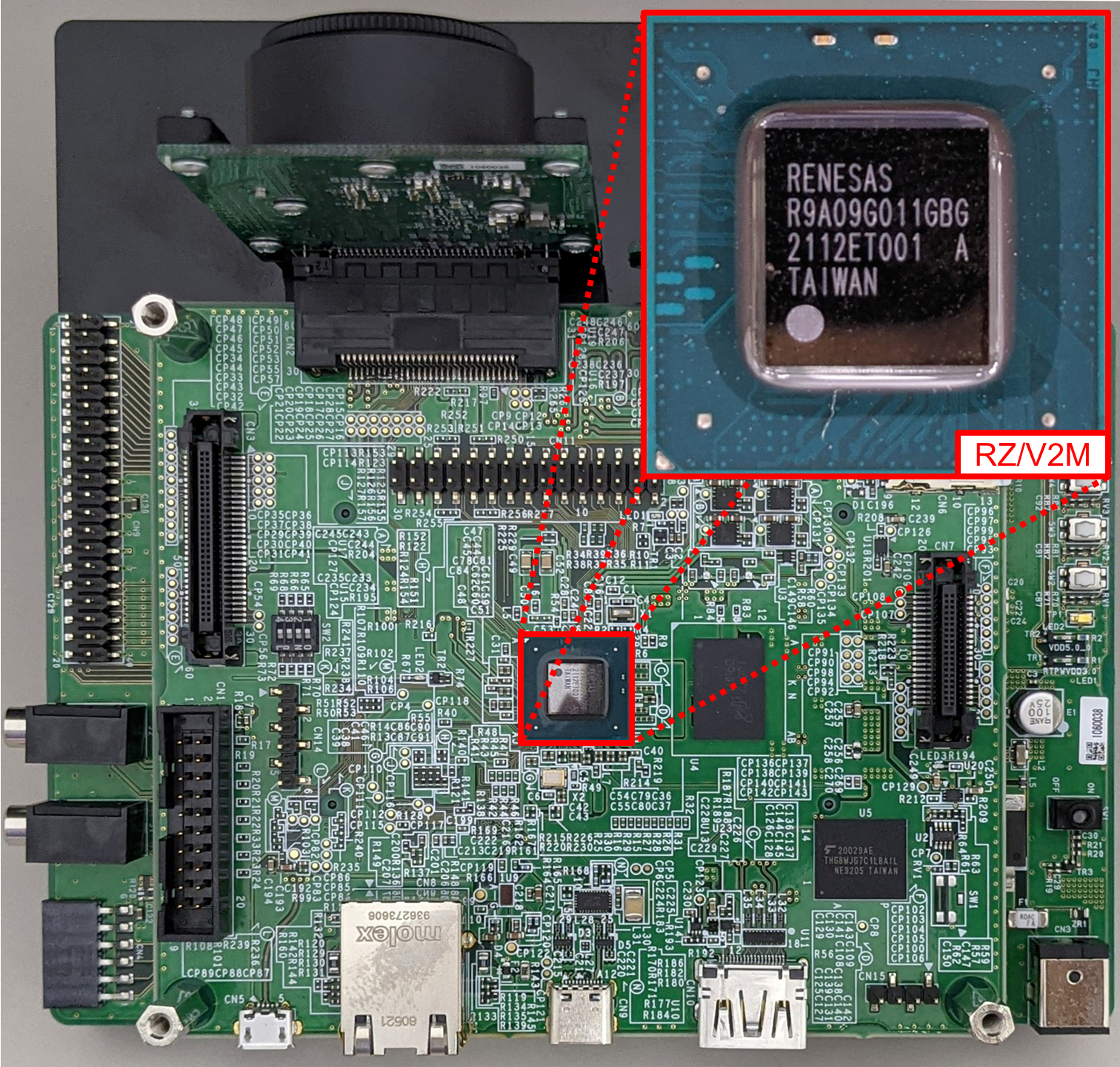

Renesas released the RZ/V series of MPUs to solve these conditions. The RZ/V series utilizes our technology and know-how that has supported the embedded market for a long time. I will introduce the “RZ/V2M” which is the first product of the RZ/V series, in terms of AI inference.

What is RZ/V2M?

RZ/V2M has DRP-AI (Dynamically Reconfigurable Processor for AI) as the key technology to accelerate AI inference, and it has various peripheral functions. In addition, RZ/V2M achieved less than 4W* power consumption during AI inference by applying various power consumption measures.

* Actual power consumption will depend on the customer's usage conditions.

These are the main features of RZ/V2M.

* Tuned ISP has optimized the parameters by experts for Renesas selected sensors.

RZ/V2M is being adopted in applications such as surveillance cameras, industrial cameras, marketing cameras, gateways, robots, and medical equipment using AI inference, and these products will be shipped to the market soon.

“DRP-AI”: Accelerator for AI inference

The most important feature of DRP-AI is all the processing for AI inference can be performed with DRP-AI alone. DRP-AI consists of the following two blocks, each of which performs AI inference through cooperative operation without a CPU.

- DRP:

Processing for except convolution layer which requires various processing using reconfigurable technology. Also, DRP can be treated pre-processing such as resizing before AI inference. - AI-MAC:

Processing for the convolution layer which requires simple calculation with a large amount of processing

The benefit of this structure is it does not use a CPU or other hardware IP. Stable AI inference can be realized even if another process is working at the same time. For more technical information on DRP-AI, please refer to “DRP-AI white paper”.

Performance of RZ/V2M

In general, the performance of AI inference indicates as “TOPS”. However, this index does not include the factor such as power consumption, so it is difficult to consider the total system using this index. Therefore, Renesas introduces the performance as system point of view. I will introduce AI performance and the whole system’s power consumption in this blog.

The “system” includes AI required functions such as CMOS input and ISP function. It also includes the power consumption of the RZ/V2M and external devices being put on the evaluation board.

This table shows the result.

| RZ/V2M MobileNet V2 | RZ/V2M YOLOv3-tiny | Other Company’s YOLOv3-tiny (Reference) | |

|---|---|---|---|

| AI performance (fps) | 55fps | 52fps | 46fps |

| Power consumption | 2.6W | 3.0W | 9.8W |

| Power efficiency | 21.3fps/W | 17.2fps/W | 4.7fps/W |

As this result, the RZ/V2M achieved better AI performance with only 30% power consumption from Other Company’s one. We had been introduced to customers the index of power efficiency (fps/W) is also achieved almost 3.5 times.

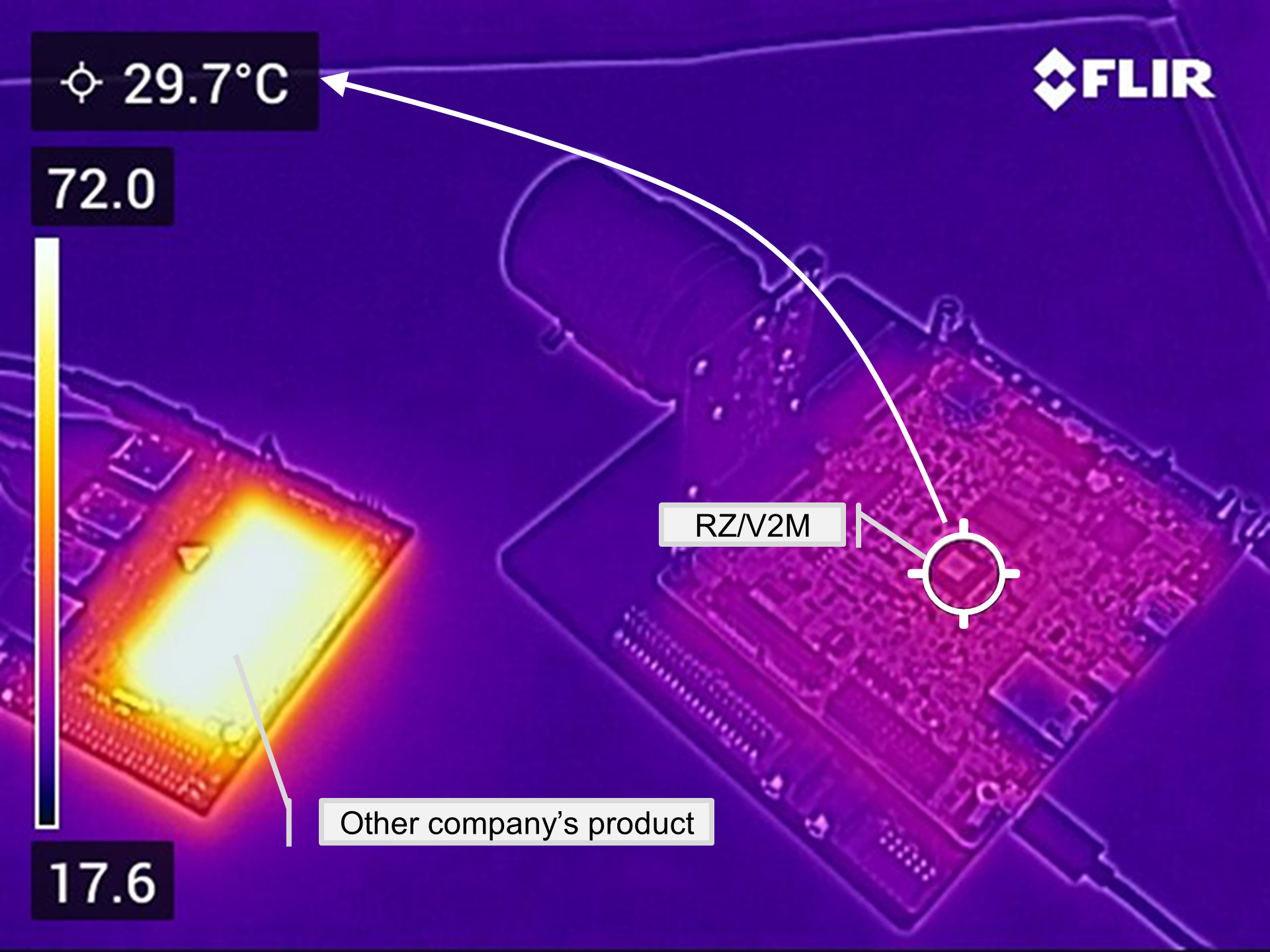

The following photo shows the appearance and surface temperature of the evaluation board that we measured.

The photo on the left shows the appearance of the evaluation board. As you can see, the heatsink is not mounted.

The photo on the right shows the surface temperature after running AI inference for about 30 minutes. Since the surface temperatures of the chip is only 30 °C, it is easy to understand why the RZ/V2M does not require any cooling components. Also, the board made by Other Company reaches around 75 °C even with heatsinks to dissipate the heat.

Summary

We often hear customers use GPUs and FPGAs for initial evaluation because it is easy to start evaluating. On the other hand, we also heard from customers “it is difficult to use these chips for mass production due to their high-power consumption and heat generation”.

In fact, we have received the following feedback from customers who have selected the RZ/V2M:

- "RZ/V2M is an impressive device! It achieves almost the same AI performance with only less than 25% power consumption of an FPGA."

- "We are deeply impressed with the performance of DRP-AI and have high hopes for equipment AI into our products."

I have confident that you can understand RZ/V2M is the most suitable chip for endpoint Vision AI from this blog.

Related information:

1. e-AI: endpoint AI proposed by Renesas

2. RZ/V2M: Mid-range product for camera applications with high-performance ISP in addition to DRP-AI

3. RZ/V2L: Entry-level product group equipped with the same DRP-AI as RZ/V2M

4. DRP-AI, DRP-AI White Paper: Technical information for DRP-AI