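As demand for greater functionality and intelligence at the edge and endpoint grows, so does the need for more processing power and larger memory in these devices. Applications are becoming more feature-rich, and users expect sophisticated graphics and user interfaces, learning algorithms, network connectivity, and advanced security in their products. More recently, machine learning has enabled voice and vision AI capabilities, allowing devices to make intelligent decisions at the edge and take action without human intervention. Sophisticated software frameworks are essential to these solutions.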

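Processing power is critical for these applications, but they also need fast, reliable, low-power, non-volatile storage for code and data. In embedded system development, the memory a designer chooses can significantly affect performance, cost, design complexity, and power consumption. New architectures are needed to meet the evolving requirements for high performance, large memory, and low power while keeping costs down. Close collaboration with memory manufacturers is necessary to ensure fully validated solutions that meet performance requirements. Let's look at the various memory architecture options and the use cases each one suits best.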

Internal Flash or External Memory

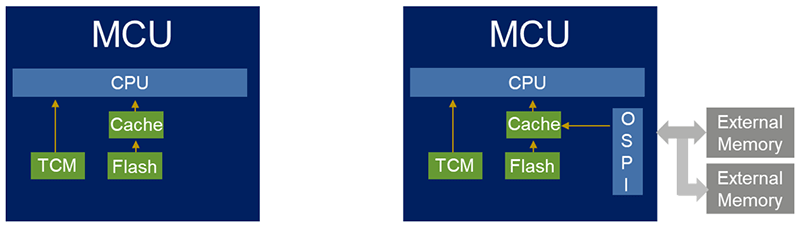

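Traditionally, general-purpose MCUs used in low- to mid-performance applications have relied on embedded flash (typically less than 2MB) as non-volatile storage for code. These integrated MCUs are well suited to most IoT applications that need low to mid performance across diverse market segments such as industrial and building automation, medical, home appliances, and smart home. Embedded flash offers many advantages, including low latency, low power consumption, and high performance, and it provides an integrated solution that suits simpler, space-constrained applications and reduces design complexity. From a security standpoint, it eliminates an additional attack surface. For these reasons, MCUs with embedded flash remain the preferred solution for most applications with modest performance and feature needs.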

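However, embedded flash comes at a cost, and beyond a certain memory density (2MB, for example) it tends to become prohibitively expensive. Embedding flash makes MCU wafer processing more complex, and the additional manufacturing steps it requires significantly increase silicon cost.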

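In addition, MCU manufacturers are moving to more advanced, finer process geometries of 28/22nm and below to achieve much higher performance and integrate more functionality on chip. MCUs running at 400MHz to 1GHz are now common, supporting advanced graphics, analog functions, connectivity, safety features, and advanced security to protect against data/IP theft and tampering. This addresses the performance needs, but embedded flash scales poorly at smaller process nodes: flash cells do not shrink efficiently below 40nm, so integrating memory into these devices is a challenge. Together, these factors make embedded flash less feasible and more expensive at advanced nodes.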

External Memory

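Designers are exploring new architectures to bridge this gap and are increasingly turning to external flash for more demanding, high-performance applications. The need to support more complex use cases such as high-performance graphics, audio processing, and machine learning is driving this demand, and a sharp drop in standalone memory prices is accelerating the trend. External flash is essentially part of the MCU memory map and can be read directly, whether for data logging or for storing and executing software, so using external memory extends the code and data space of an embedded system.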

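For IoT product manufacturers, moving to external flash for high-performance applications brings several benefits, including flexibility in choosing memory size and future-proofing of designs. When developers add features that need a larger memory footprint, swapping a smaller memory for a pin-compatible larger one is relatively straightforward. It also allows a unified platform approach across different designs.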

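There are, of course, drawbacks: the additional latency of accessing external memory (mitigated by Quad/Octal memory and careful use of caches), slightly higher power consumption, and the added cost of the external memory itself. Board design also becomes more complex, and attention must be paid to the additional routing on the PCB and to signal integrity.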

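By decoupling the memory from the MCU, MCU manufacturers can move to more advanced process nodes, which enables higher performance, more functionality, and better power efficiency while reducing device cost. Most manufacturers now include Quad or Octal SPI interfaces with Execute-in-Place (XiP) support to enable seamless interfacing with these NOR flash devices. Some MCUs support Decryption-On-the-Fly (DOTF), which allows encrypted code images to be stored in external flash and brought in securely at run time. This solution delivers the high performance, low power, and advanced security that modern edge applications need.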

Challenges With External Memory and Mitigation Strategies

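One concern with external memory is, of course, the latency associated with external flash and its impact on overall performance. This bandwidth limitation can be eased to some extent by using a Quad or Octal SPI interface, which transfers data over four or eight parallel lines instead of the single line of standard SPI. Using double data rate (DDR), which transfers data on both the rising and falling clock edges, doubles this throughput. External flash manufacturers also support burst read modes for faster data access. Caches can reduce the impact of latency, but using them optimally requires careful software management. System designers can also mitigate the latency problem by copying code into internal SRAM and executing it from there, which gives the best performance.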
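The effect of bus width and DDR on raw bandwidth can be illustrated with a little arithmetic. The sketch below is only a back-of-the-envelope model: the 100MHz clock is a hypothetical value chosen for illustration, the function name is made up for this example, and command, address, and dummy cycles are ignored, so real sustained throughput will be lower.

```python
def peak_throughput_mb_s(clock_mhz: float, data_lines: int, ddr: bool = False) -> float:
    """Peak raw transfer rate in MB/s, ignoring command, address, and dummy cycles."""
    # Each data line carries one bit per clock in SDR and two bits per clock in DDR.
    bits_per_clock = data_lines * (2 if ddr else 1)
    return clock_mhz * bits_per_clock / 8  # 8 bits per byte


CLOCK_MHZ = 100  # hypothetical interface clock, for illustration only

if __name__ == "__main__":
    for name, lines, ddr in [
        ("Single SPI, SDR", 1, False),
        ("Quad SPI, SDR", 4, False),
        ("Octal SPI, SDR", 8, False),
        ("Octal SPI, DDR", 8, True),
    ]:
        rate = peak_throughput_mb_s(CLOCK_MHZ, lines, ddr)
        print(f"{name}: {rate:.1f} MB/s")
```

Under these assumptions, going from one line to eight multiplies the peak rate by eight, and DDR doubles it again.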

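The higher power consumption that comes with external flash is another concern, so memory manufacturers pay particular attention to optimizing the current consumption of these devices. External memory also carries security risk because it enlarges the attack surface, creating vulnerabilities that attackers could exploit and that must therefore be protected. For this reason, MCU manufacturers need to add encryption/decryption capability to the Quad/Octal SPI interface so that encrypted code can be stored and brought in securely.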

Using External Flash With RA MCUs

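The Renesas RA family MCUs integrate embedded flash along with multiple interfaces to external memory, providing maximum flexibility and performance. They support Quad or Octal SPI interfaces, which achieve higher data throughput by using four or eight data lines instead of the single data line of standard SPI. This can significantly improve performance, particularly in applications that need fast memory access, such as graphics, audio, and data logging.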

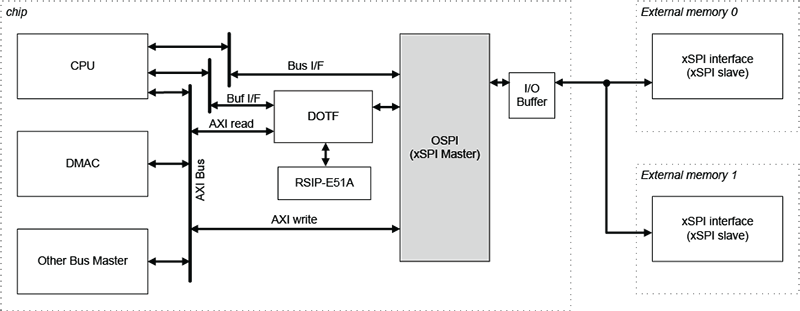

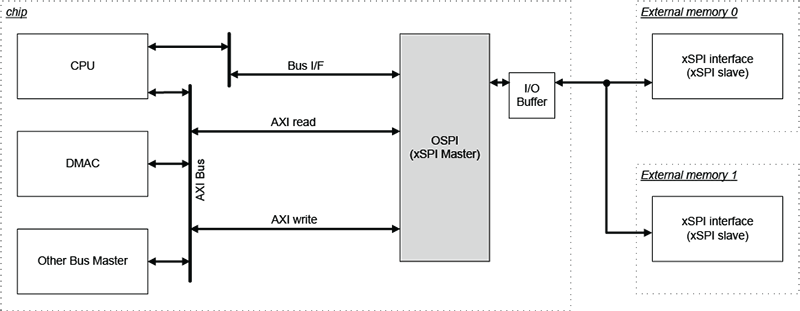

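All RA8 Series MCUs include an Octal SPI interface compliant with the eXpanded Serial Peripheral Interface (xSPI). The xSPI protocol specifies the interface to non-volatile memory devices and provides high data throughput, low signal count, and limited backward compatibility with legacy SPI devices. Two external memory devices can be connected to the Octal SPI interface using chip selects, which gives designers greater flexibility. Some RA8 MCUs also support Decryption-On-the-Fly (DOTF), which allows encrypted code images stored in external flash to be securely brought in and executed. Figures 2 and 3 show the Octal SPI interface on RA8 MCUs with and without DOTF.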

Some features of the Octal SPI interface:

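- Protocol – xSPI, compliant with:

  - JEDEC standard JESD251 (Profile 1.0 and 2.0)

  - JESD251-1 and JESD252

- Supported memory types – Octal flash and Octal RAM, HyperFlash/RAM

- Data throughput – up to 200MB/s

- Data transmission/reception – can communicate with up to two slave devices using chip selects, but not with both at the same time

- Supports Execute-in-Place (XiP) operation

- Supported modes:

  - 1/4/8 pins with SDR/DDR (1S-1S-1S, 4S-4D-4D, 8D-8D-8D)

  - 2/4 pins with SDR (1S-2S-2S, 2S-2S-2S, 1S-4S-4S, 4S-4S-4S)

- Memory mapping:

  - Supports up to 256MB of address space per CS

  - Prefetch function for low-latency burst reads

  - Outstanding buffer for high-throughput burst writes

- Security – DOTF support (available on some RA8 MCUs)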
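To make the memory-mapping idea concrete, here is a minimal sketch of how an address inside the external memory space could be resolved to a chip select and an offset within that device. The 256MB window per chip select comes from the list above; the base addresses and the `decode` helper are hypothetical, so check the device's hardware manual for the real memory map.

```python
from typing import Optional, Tuple

CS_WINDOW_SIZE = 256 * 1024 * 1024  # 256MB of address space per chip select

# Hypothetical base addresses, used only for illustration.
CS_BASE = {0: 0x8000_0000, 1: 0x9000_0000}


def decode(address: int) -> Optional[Tuple[int, int]]:
    """Return (chip_select, offset) for a mapped address, or None if unmapped."""
    for chip_select, base in CS_BASE.items():
        if base <= address < base + CS_WINDOW_SIZE:
            return chip_select, address - base
    return None


if __name__ == "__main__":
    for addr in (0x8000_0010, 0x9FFF_FFFF, 0xA000_0000):
        print(hex(addr), "->", decode(addr))
```

Because only one chip select is active for any given address, the two devices share the bus without ever being accessed at the same time.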

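In addition to internal flash and external memory interfaces, RA8 MCUs include tightly coupled memory (TCM) and I/D caches that help optimize performance. TCM is zero-wait-state memory with the lowest latency of all on-chip memories, and it can be used for the most critical sections of code.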
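A rough way to reason about how much a cache hides external memory latency is the standard average-access-time formula: hit rate times hit cost plus miss rate times miss cost. The sketch below applies it with hypothetical cycle counts (1 cycle for a cache hit, 40 cycles for a fetch from external flash); these are illustrative assumptions, not measured figures for any device.

```python
def average_access_cycles(hit_rate: float, hit_cycles: float, miss_cycles: float) -> float:
    """Average cycles per access for a given cache hit rate."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * hit_cycles + (1.0 - hit_rate) * miss_cycles


HIT_CYCLES = 1    # hypothetical cost of a cache hit
MISS_CYCLES = 40  # hypothetical cost of a fetch from external flash

if __name__ == "__main__":
    for hit_rate in (0.50, 0.90, 0.99):
        cycles = average_access_cycles(hit_rate, HIT_CYCLES, MISS_CYCLES)
        print(f"hit rate {hit_rate:.0%}: {cycles:.2f} cycles per access on average")
```

The average cost is dominated by misses, which is why code with poor locality benefits from being placed in TCM or SRAM instead of relying on the cache.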

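The various memories and memory interfaces on the RA8 Series allow several flexible memory configurations. In the first, code is stored in and executed from the internal embedded flash, which gives a simple, low-latency, highly secure, and power-efficient system. However, this is not a scalable solution: code growth beyond the capacity of the embedded flash has to be accommodated with external memory.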

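In the second configuration, code is stored in and executed from external flash. This is the XiP capability, and it is the most flexible and scalable option. As code size grows, the external memory can simply be swapped for a pin-compatible higher-density device, so upgrading is easy and requires no board redesign. The trade-off is higher power consumption and higher latency, which can affect overall performance.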

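A third option is to store code in external flash and load it into internal SRAM or TCM for execution. This delivers the best performance because the code runs from fast SRAM, but code size is limited by the available SRAM, and software complexity increases when the code exceeds the available SRAM or TCM. Code is lost when the SRAM is powered down, so it must be reloaded on every power cycle, which can also increase wake-up time.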
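The three configurations can be summarized as a simple decision rule. The sketch below is one possible heuristic and not a recommendation from the article: the `MemoryBudget` type, the function name, the ordering of the checks, and all sizes are assumptions made for illustration. A real decision would also weigh power, security, and wake-up time.

```python
from dataclasses import dataclass


@dataclass
class MemoryBudget:
    internal_flash_kb: int  # embedded flash available for code (0 for a flashless part)
    sram_kb: int            # SRAM/TCM that can be set aside for code


def choose_configuration(code_kb: int, budget: MemoryBudget, performance_critical: bool) -> str:
    """Pick one of the three configurations using a simple size-based heuristic."""
    if code_kb <= budget.internal_flash_kb:
        return "store and execute in internal flash"
    if performance_critical and code_kb <= budget.sram_kb:
        return "store in external flash, execute from SRAM/TCM"
    return "store in external flash, execute in place (XiP)"


if __name__ == "__main__":
    with_flash = MemoryBudget(internal_flash_kb=2048, sram_kb=1024)  # hypothetical sizes
    flashless = MemoryBudget(internal_flash_kb=0, sram_kb=1024)      # hypothetical sizes
    print(choose_configuration(512, with_flash, performance_critical=False))
    print(choose_configuration(4096, with_flash, performance_critical=True))
    print(choose_configuration(768, flashless, performance_critical=True))
```

In practice the choice is rarely all or nothing: a design can run most code with XiP and place only its hottest routines in SRAM or TCM.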

Summary

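There is no single right answer when it comes to choosing a memory option. Most IoT applications with low to mid performance needs can use internal flash, while many high-performance applications will need external flash. The choice should be based on several considerations, including application requirements, required memory size, expected performance, system architecture, power targets, security concerns, and future product/platform plans.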

Every option has its advantages and disadvantages.

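Internal flash

- Offers simplicity

- Tightly integrated

- Highly secure solution

- Suits a wide range of applications

External flash

- Flexibility

- Memory expansion

- Scalability

- Best suited to new IoT and edge AI applications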

Understanding these trade-offs allows developers to make informed decisions that align with their project goals.

Visit the product page of each RA8 Series device for details on the flexible memory options, datasheets, samples, and evaluation kits.